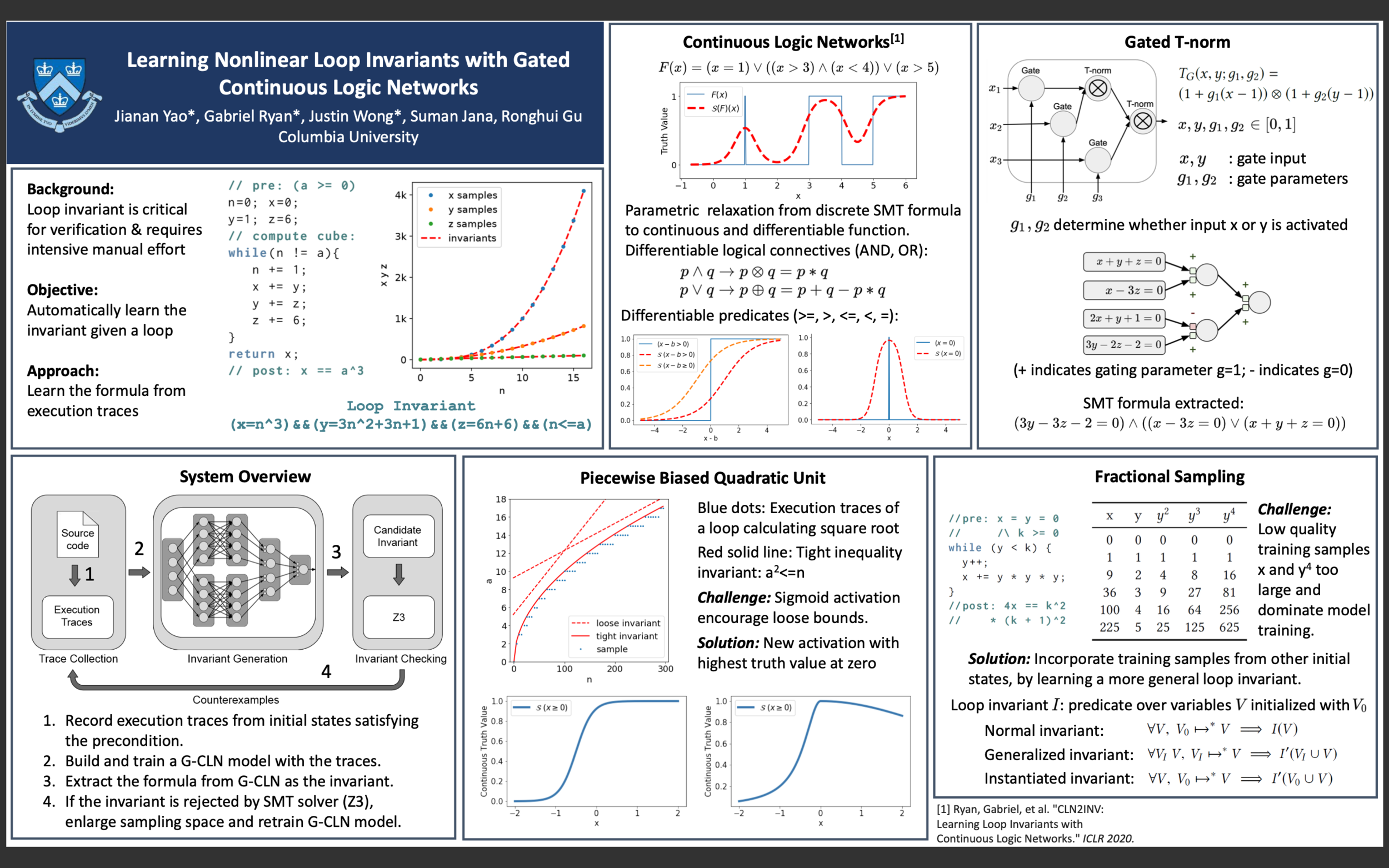

Learning Nonlinear Loop Invariants with Gated Continuous Logic Networks

Video

Team Information

Team Members

Jianan Yao, PhD Student, Department of Computer Science, Columbia Engineering

Faculty Advisor: Ronghui Gu, Tang Family Assistant Professor of Computer Science, Columbia Engineering

Abstract

Verifying real-world programs often requires inferring loop invariants with nonlinear constraints. This is especially true in programs that perform many numerical operations, such as control systems for avionics or industrial plants. Recently, data-driven methods for loop invariant inference have shown promise, especially on linear loop invariants. However, applying data-driven inference to nonlinear loop invariants is challenging due to the large numbers of and large magnitudes of high-order terms, the potential for overfitting on a small number of samples, and the large space of possible nonlinear inequality bounds.

In this work, we introduce a new neural architecture for general SMT learning, the Gated Continuous Logic Network (G-CLN), and apply it to nonlinear loop invariant learning. G-CLNs extend the Continuous Logic Network (CLN) architecture with gating units and dropout, which allow the model to robustly learn general invariants over large numbers of terms. To address overfitting that arises from finite program sampling, we introduce fractional sampling---a sound relaxation of loop semantics to continuous functions that facilitates unbounded sampling on the real domain. We additionally design a new CLN activation function, the Piecewise Biased Quadratic Unit (PBQU), for naturally learning tight inequality bounds. Contact this Team

Team Contact: Jianan Yao (use form to send email)